Moderation after the deed always comes too late

By Jordy Nijenhuis, Anand Sheombar & Lisa De Smedt

It’s Monday morning, July 12th, and England didn’t manage to ‘bring football home’. After an intense final that had to be decided by a penalty shoot-out, all that’s left are celebrating Italians, crushed dreams, and a whole bunch of racist comments on social media. The three England players whose penalties were either saved by Italian goalkeeper Donnarumma or hit the post, Saka, Sancho, and Rashford, were aggressively targeted with racist abuse online.

Immediately after the incident, we began collecting social media data to analyse the comments. Surprisingly, given the huge volume of data collected, our filter caught remarkably little hate speech. The lexicon used to filter the collected data consisted of about 200 keywords and was built specifically for football-related content in the Farenet project (for comparison, the EOOH lexicons currently in development will contain thousands of keywords). Because this lexicon is football-specific, we may have missed some generic hate that was still aimed at the players in question; however, every comment that included one of these players' names was caught by our data collection.
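To make the two capture routes described above concrete, here is a minimal sketch of keyword-lexicon filtering combined with name matching. It is a hypothetical illustration, not the Farenet pipeline: the lexicon entries (`keyword_a` and so on) are placeholders, since the actual ~200 football-specific terms are not reproduced here, and the tokenisation and the function names `classify` and `filter_comments` are assumptions chosen for clarity.

```python
import re

# Hypothetical placeholder lexicon: stands in for the ~200 football-specific
# Farenet keywords, which are not reproduced here.
LEXICON = {"keyword_a", "keyword_b", "keyword_c"}

# Player names from the article: any comment mentioning them is kept,
# whether or not it contains a lexicon term.
PLAYER_NAMES = {"saka", "sancho", "rashford"}

# Word tokens: lowercase letters, digits and underscores (so "@Sancho" -> "sancho").
TOKEN_RE = re.compile(r"[a-z0-9_]+")


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return the set of word tokens it contains."""
    return set(TOKEN_RE.findall(text.lower()))


def classify(comment: str) -> dict[str, bool]:
    """Report whether a comment hits the lexicon and whether it names a player."""
    tokens = tokenize(comment)
    return {
        "lexicon_hit": bool(tokens & LEXICON),
        "mentions_player": bool(tokens & PLAYER_NAMES),
    }


def filter_comments(comments: list[str]) -> list[str]:
    """Keep comments that match the lexicon or mention one of the players."""
    kept = []
    for comment in comments:
        flags = classify(comment)
        if flags["lexicon_hit"] or flags["mentions_player"]:
            kept.append(comment)
    return kept


if __name__ == "__main__":
    sample = [
        "Great save by Donnarumma",
        "keyword_b everywhere tonight",
        "@Sancho unlucky penalty",
        "generic abuse with no listed term",
    ]
    print(filter_comments(sample))
```

Run on the sample, the filter keeps the lexicon hit and the comment naming Sancho. The last comment, generic abuse that neither uses a listed term nor names a player, slips through. This is the blind spot that a small, domain-specific lexicon leaves open.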

This left us with two plausible explanations for the small number of hate speech results: either the platforms had already removed most of the racist comments, or the abuse wasn’t as bad as had been reported. The latter is highly unlikely, especially considering the amount of coverage and public outcry on the topic. So, in the case of the EURO 2020 final, we can reasonably assume that most of the harmful content aimed directly at Saka, Sancho, and Rashford was removed by major social media platforms such as Twitter and Facebook.

Public outcry seems to have influenced the platforms’ decision to remove the content. In the months before the tournament, players and clubs had been ‘boycotting’ social media, and racist abuse had repeatedly been raised in major news outlets. Attention to online hate speech surged after the final, with the Prime Minister of the United Kingdom, Boris Johnson, publicly condemning it after initially downplaying the problem.

The racist slurs against these three young black England players provoked a backlash and public outcry that put social media platforms under pressure. In response, the platforms quickly began removing racist content or making it less visible. Whether they acted quickly enough is still debatable: we have heard accounts of people who reported hate speech just after the game receiving automated responses stating that it did not violate the platform’s terms.

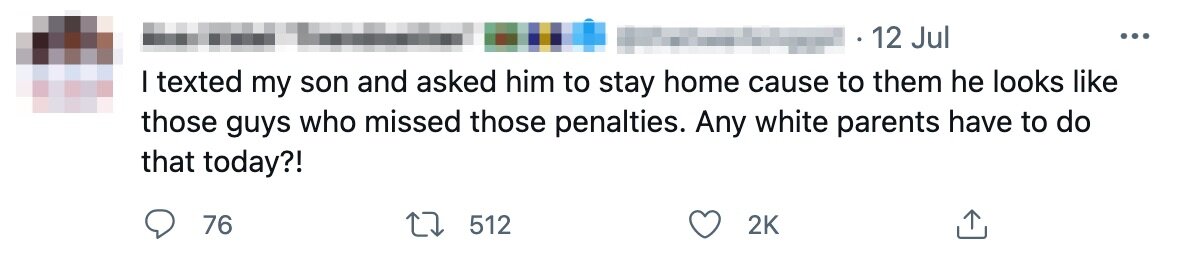

This tweet from a concerned parent, caught in our data collection, clearly illustrates the impact of online racism and shows that online hate leads to real-life harm, even when it is removed later.

Still, the EURO 2020 final clearly shows that tech firms can respond and take down hateful content when they want to. It is a good thing that these platforms finally took action; however, prevention is still lacking, given that they acted on this isolated incident only after considerable public pressure. If we really want to protect communities and stop hate from spreading in the first place, we need to explore new and different policies. Hate speech causes harm in real life, even when it’s removed later. Moderation after the deed always comes too late.